To split the strings of a column in PySpark, we use the split() function. split() takes the column and a delimiter as arguments. The delimiter is treated as a regular expression, and the result is a single array column. Let’s see an example of how to split the string values of a column in PySpark.

- String split of the column in pyspark with an example.

We will be using the dataframe df_states.

String Split of the column in pyspark: Method 1

- split() takes the column as its first argument and the delimiter (“-”) as its second argument. It splits each value of the column at that delimiter and returns an array of the parts.

- getItem(0) gets the first part of the split, and getItem(1) gets the second part.

```python
# String split of the column in pyspark
from pyspark.sql.functions import split, col

(df_states
    .withColumn("col1", split(col("State_Name"), "-").getItem(0))   # part before "-"
    .withColumn("col2", split(col("State_Name"), "-").getItem(1))   # part after "-"
    .show())
```

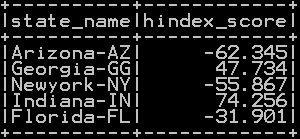

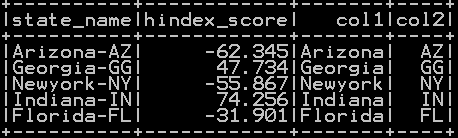

So the resultant dataframe keeps all of its original columns and adds col1 (the part before “-”) and col2 (the part after it).

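String Split of the column in pyspark: Method 2

split() again takes the column as its first argument and the delimiter (“-”) as its second argument. Selecting only the split column gives one array per row. rdd.flatMap(lambda x: x) unwraps each row into that array, and toDF() then turns the first element of each array (the part before the delimiter) into col1 and the second element (the part after it) into col2.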

```python
# String split of the column in pyspark
import pyspark.sql.functions as f
df_split = df_states.select(f.split(df_states.State_Name, "-")).rdd.flatMap(lambda x: x).toDF(schema=["col1", "col2"])
df_split.show()
```

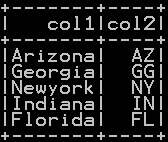

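So the split columns will be col1 and col2. Unlike Method 1, the result contains only these two columns, because select() keeps nothing but the split array.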
