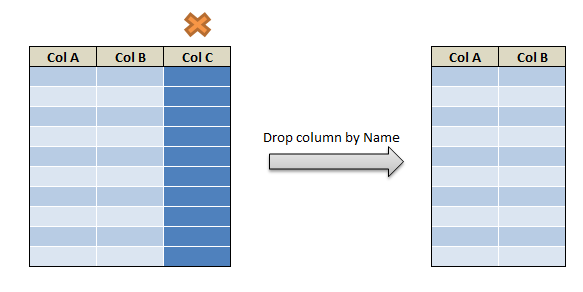

Deleting or dropping a column in pyspark is done with the drop() function: pass it the name of a column (or a column reference) and it returns a new dataframe without that column. Single and multiple columns can each be dropped in more than one way. We will also look at how to drop columns by position, and by whether the column name starts with, ends with or contains a given string. Dropping columns that contain NA/NaN or null values is also shown with an example. We will see how to

- Drop a single column in pyspark with example

- Drop multiple columns in pyspark with example

- Drop columns whose name contains a string in pyspark (a "like" filter on column names)

- Drop columns whose name starts with a specific string in pyspark

- Drop columns whose name ends with a specific string in pyspark

- Drop columns by column position in pyspark

- Drop columns with NA/NaN and null values in pyspark

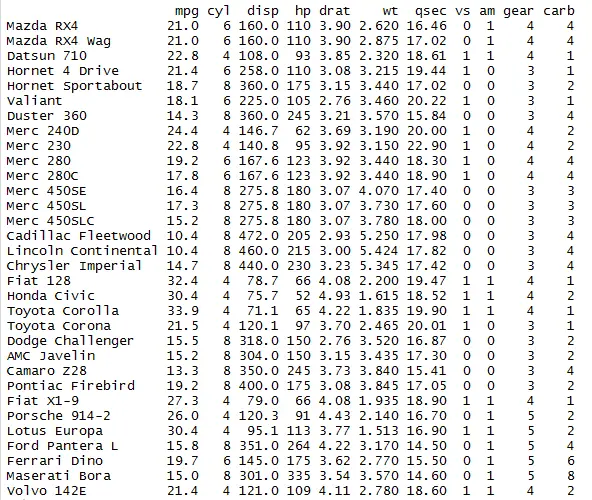

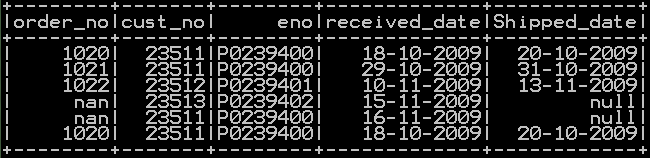

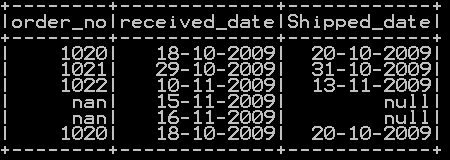

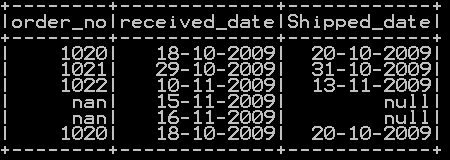

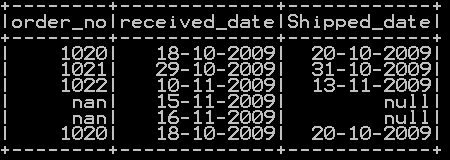

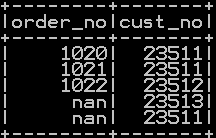

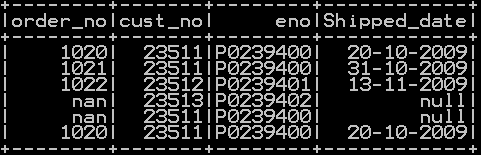

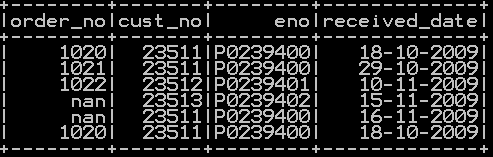

We will be using df_orders.

Drop single column in pyspark – Method 1:

Drop a single column in pyspark using the drop() function. Passing the column name as a string to drop() drops that particular column.

```python
## drop single column
df_orders.drop('cust_no').show()
```

So the resultant dataframe has the “cust_no” column dropped.

Drop single column in pyspark – Method 2:

Drop a single column in pyspark by passing a column reference, df.column_name, to the drop() function instead of the name as a string.

```python
## drop single column
df_orders.drop(df_orders.cust_no).show()
```

So the resultant dataframe has the “cust_no” column dropped.

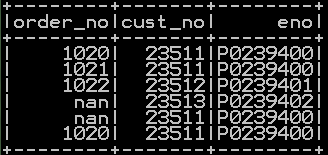

Drop multiple columns in pyspark – Method 1:

Drop multiple columns in pyspark using the drop() function. Passing several column names as separate arguments to drop() drops all of those columns.

```python
## drop multiple columns
df_orders.drop('cust_no', 'eno').show()
```

So the resultant dataframe has the “cust_no” and “eno” columns dropped.

Drop multiple columns in pyspark – Method 2:

Drop multiple columns in pyspark using the drop() function and a list. The names of the columns to be dropped are stored in a list named “columns_to_drop”, which is unpacked with * and passed to the drop() function.

```python
## drop multiple columns
columns_to_drop = ['cust_no', 'eno']
df_orders.drop(*columns_to_drop).show()
```

So the resultant dataframe has the “cust_no” and “eno” columns dropped.
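Because drop() silently ignores string names it cannot find, a misspelled entry in columns_to_drop simply has no effect. One option is to validate the list against df_orders.columns before unpacking it. The helper checked_drop_list below is hypothetical (it is not part of PySpark) and is plain Python, since df.columns is an ordinary list of strings.

```python
def checked_drop_list(all_columns, columns_to_drop):
    """Hypothetical helper: return columns_to_drop as a list, but raise
    ValueError if any name is missing from all_columns."""
    missing = [c for c in columns_to_drop if c not in all_columns]
    if missing:
        raise ValueError(f"columns not found: {missing}")
    return list(columns_to_drop)


# In PySpark this would be used as:
#   df_orders.drop(*checked_drop_list(df_orders.columns, columns_to_drop))
all_columns = ["cust_no", "eno", "order_no", "received_date", "shipped_date"]
print(checked_drop_list(all_columns, ["cust_no", "eno"]))  # ['cust_no', 'eno']

try:
    checked_drop_list(all_columns, ["cust_number"])
except ValueError as err:
    print(err)  # columns not found: ['cust_number']
```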

Drop multiple columns in pyspark – Method 3:

Drop multiple columns in pyspark by chaining two drop() calls, which drop the columns one after another in a single statement as shown below.

```python
## drop multiple columns
df_orders.drop(df_orders.eno).drop(df_orders.cust_no).show()
```

So the resultant dataframe has the “cust_no” and “eno” columns dropped.

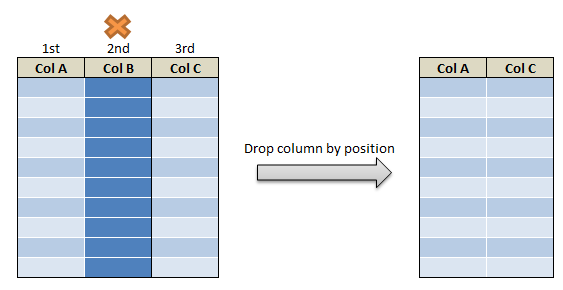

Drop columns using position in pyspark:

Dropping columns by position is done through the list of column names, df_orders.columns, which can be indexed and sliced like any Python list. Below, the first two columns (positions 0 and 1) are kept with the select() function and all the remaining columns are dropped.

```python
## drop multiple columns using position: keep positions 0 and 1, drop the rest
df_orders.select(df_orders.columns[:2]).show()
```

So the resultant dataframe keeps only the first two columns of df_orders; all the other columns are dropped.

Drop columns whose names start with a specific string in pyspark:

Dropping multiple columns whose names start with a specific string takes two steps in pyspark. First, the names that start with the string are collected from df_orders.columns using Python's startswith() string method, and then the list is passed to the drop() function as shown below.

```python
## drop multiple columns starts with a string
some_list = df_orders.columns
columns_to_drop = [i for i in some_list if i.startswith('cust')]
df_orders.drop(*columns_to_drop).show()
```

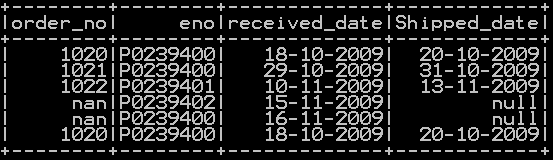

So the column whose name starts with “cust” is dropped, and the resultant dataframe will be

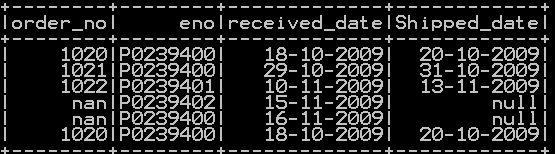
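Python's str.startswith() also accepts a tuple of prefixes, so several prefixes can be matched in one pass. Since the filtering happens on the plain list df_orders.columns, it can be sketched in pure Python; the column list below is a hypothetical copy of df_orders.columns.

```python
# Hypothetical copy of df_orders.columns
columns = ["cust_no", "eno", "order_no", "received_date", "shipped_date"]

# str.startswith accepts a tuple and returns True if any of the prefixes matches
columns_to_drop = [c for c in columns if c.startswith(("cust", "order"))]
print(columns_to_drop)  # ['cust_no', 'order_no']

# With the DataFrame, the list would then be unpacked: df_orders.drop(*columns_to_drop)
```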

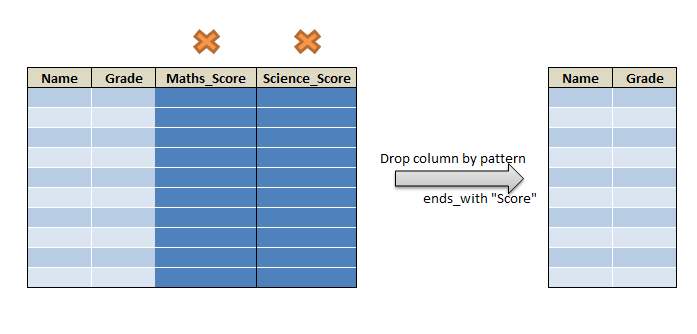

Drop columns whose names end with a specific string in pyspark:

Dropping multiple columns whose names end with a specific string also takes two steps. First, the names that end with the string are collected using Python's endswith() string method, and then the list is passed to the drop() function as shown below.

```python
## drop multiple columns ends with a string
some_list = df_orders.columns
columns_to_drop = [i for i in some_list if i.endswith('date')]
df_orders.drop(*columns_to_drop).show()
```

So the columns whose names end with “date” are dropped from the resultant dataframe.
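endswith() is case-sensitive, so a column named, say, Received_DATE would not match 'date'. Lower-casing each name before the test is one way around that. The column list here is hypothetical and mixes cases on purpose.

```python
# Hypothetical column names with inconsistent casing
columns = ["cust_no", "eno", "Received_DATE", "shipped_date"]

# Exact, case-sensitive match
exact = [c for c in columns if c.endswith("date")]
# Compare on a lower-cased copy of the name; the original name is kept in the list
case_insensitive = [c for c in columns if c.lower().endswith("date")]

print(exact)             # ['shipped_date']
print(case_insensitive)  # ['Received_DATE', 'shipped_date']
```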

Drop columns whose names contain a specific string in pyspark:

Dropping multiple columns whose names contain a specific string works the same way. First, the names that contain the string are collected using Python's in operator, and then the list is passed to the drop() function as shown below.

```python
## drop multiple columns that contain a string
some_list = df_orders.columns
# keep every name in which the substring 'ved' occurs
columns_to_drop = [i for i in some_list if 'ved' in i]
df_orders.drop(*columns_to_drop).show()
```

So the column whose name contains “ved” is dropped, and the resultant dataframe with “received_date” dropped will be

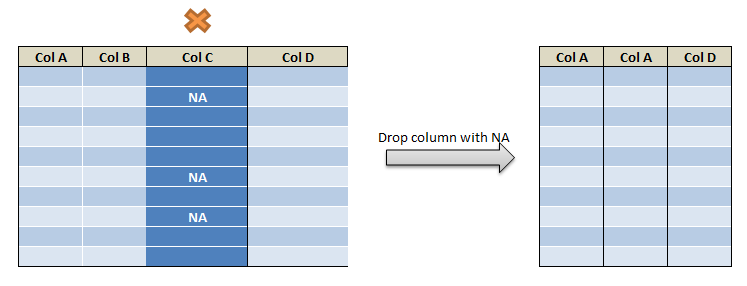
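For patterns more complex than a single substring, Python's re module can build the list instead. re.search() matches anywhere in the name, so a plain substring such as 'ved' behaves like Python's in operator, while anchors such as $ and ^ give ends-with and starts-with tests. The helper columns_matching is hypothetical and runs on a hypothetical copy of df_orders.columns.

```python
import re


def columns_matching(columns, pattern):
    """Hypothetical helper: return the names in which the regex pattern occurs."""
    regex = re.compile(pattern)
    return [c for c in columns if regex.search(c)]


# Hypothetical copy of df_orders.columns
columns = ["cust_no", "eno", "order_no", "received_date", "shipped_date"]

print(columns_matching(columns, r"ved"))           # ['received_date']
print(columns_matching(columns, r"_date$"))        # ['received_date', 'shipped_date']
print(columns_matching(columns, r"^(eno|order)"))  # ['eno', 'order_no']

# With the DataFrame: df_orders.drop(*columns_matching(df_orders.columns, r"ved"))
```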

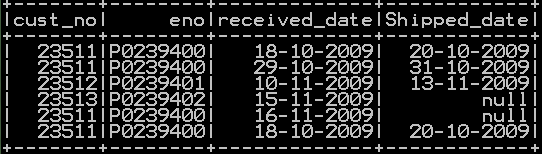

Drop the columns which have Null values in pyspark:

Dropping columns that contain null values takes a small helper function (a plain Python function, not a Spark UDF). It counts the null values in every column using isNull(), collects the names of the columns with at least one null, and passes them to the drop() function as shown below.

```python
import pyspark.sql.functions as F

def drop_null_columns(df):
    """
    This function drops all columns which contain null values.

    :param df: A PySpark DataFrame
    """
    # count the nulls in every column in a single pass over the data
    null_counts = df.select([F.count(F.when(F.col(c).isNull(), c)).alias(c) for c in df.columns]).collect()[0].asDict()
    # names of the columns with at least one null
    to_drop = [k for k, v in null_counts.items() if v > 0]
    df = df.drop(*to_drop)
    return df

drop_null_columns(df_orders).show()
```

So every column that contains at least one null value is dropped; here the resultant dataframe has the column “shipped_date” dropped.

Drop the columns which have NA/NaN values in pyspark:

Dropping columns that contain NaN values uses a similar helper function. The names of the columns that contain NaN values are found using the isnan() function and then passed to the drop() function as shown below. Because isnan() is meant for floating-point data, only float and double columns are checked; null values are handled by the function in the previous section.

```python
import pyspark.sql.functions as F

def drop_nan_columns(df):
    """
    This function drops all columns which contain NaN values.

    :param df: A PySpark DataFrame
    """
    # isnan() is meant for floating-point data, so only float/double columns are checked
    float_cols = [c for c, t in df.dtypes if t in ("float", "double")]
    if not float_cols:
        return df
    # count the NaN values in each of those columns in a single pass
    nan_counts = df.select([F.count(F.when(F.isnan(F.col(c)), c)).alias(c) for c in float_cols]).collect()[0].asDict()
    to_drop = [k for k, v in nan_counts.items() if v > 0]
    return df.drop(*to_drop)

drop_nan_columns(df_orders).show()
```

So every column that contains at least one NaN value is dropped; here the resultant dataframe has the column “order_no” dropped.

For other functions, refer to the cheatsheet.

Other Related Topics:

- Remove leading zero of column in pyspark

- Left and Right pad of column in pyspark – lpad() & rpad()

- Add Leading and Trailing space of column in pyspark – add space

- Remove Leading, Trailing and all space of column in pyspark – strip & trim space

- String split of the columns in pyspark

- Repeat the column in Pyspark

- Get Substring of the column in Pyspark

- Get String length of column in Pyspark

- Typecast string to date and date to string in Pyspark

- Typecast Integer to string and String to integer in Pyspark

- Extract First N and Last N character in pyspark

- Drop rows in pyspark – drop rows with condition

- Distinct value of a column in pyspark

- Distinct value of dataframe in pyspark – drop duplicates

- Count of Missing (NaN,Na) and null values in Pyspark

- Drop column in pyspark – drop single & multiple columns

- Convert to upper case, lower case and title case in pyspark

- Add leading zeros to the column in pyspark

- Concatenate two columns in pyspark