To calculate the percentile rank of a column in PySpark, we use the percent_rank() function. Combined with partitionBy() on another column, percent_rank() calculates the percentile rank of the column within each group. Let’s see an example of each.

- Percentile Rank of the column in pyspark using percent_rank()

- percent_rank() of the column by group in pyspark

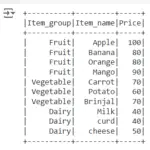

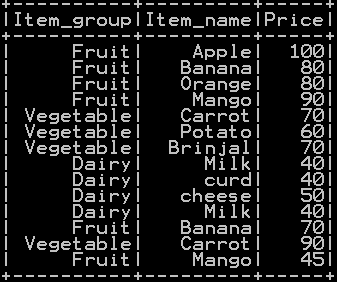

We will be using the dataframe df_basket1, which has the columns “Item_group”, “Item_name” and “Price”.

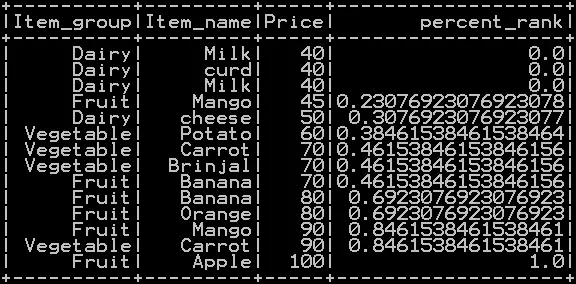

percent_rank() of the column in pyspark:

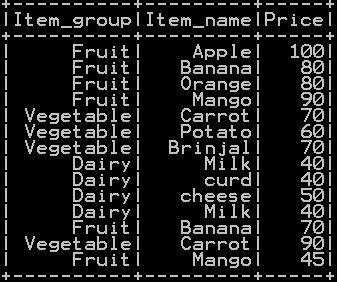

The percentile rank of the column is calculated with the percent_rank() function, applied over a window built with partitionBy() and orderBy(). Here partitionBy() is called with no arguments because we are not grouping by any variable, so the whole dataframe is treated as a single partition, and orderBy() sorts the rows by the “Price” column. The resulting percentile rank is stored in a new column named “percent_rank”, as shown below.

### Percentile Rank of the column in pyspark

from pyspark.sql.window import Window

import pyspark.sql.functions as F

df_basket1 = df_basket1.select("Item_group","Item_name","Price", F.percent_rank().over(Window.partitionBy().orderBy(df_basket1['Price'])).alias("percent_rank"))

df_basket1.show()

In the resultant dataframe, the percentile rank is calculated and stored in the “percent_rank” column, as shown below.

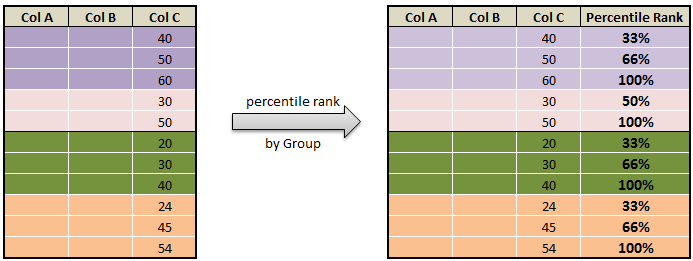

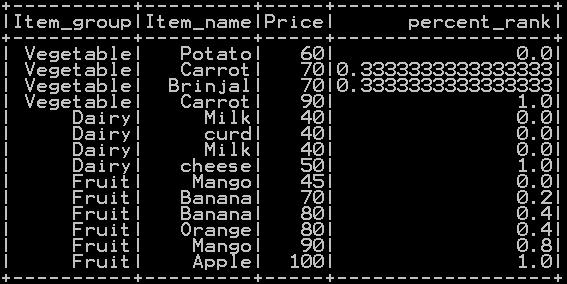
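To make the numbers in the “percent_rank” column easier to interpret: percent_rank() returns (rank - 1) / (number of rows in the partition - 1), where tied values share the lowest rank. The plain-Python sketch below mirrors that formula outside Spark. The function name `percent_rank` and the price list are hypothetical and chosen only for illustration; they are not the contents of df_basket1.

```python
def percent_rank(values):
    """Mimic the percent_rank formula: (rank - 1) / (n - 1).

    Tied values share the lowest rank, and a single-row input gets 0.0.
    """
    n = len(values)
    if n <= 1:
        return [0.0] * n

    # Position of the first occurrence of each value in sorted order
    # equals (rank - 1), because ties take the lowest rank.
    first_pos = {}
    for i, v in enumerate(sorted(values)):
        first_pos.setdefault(v, i)

    return [first_pos[v] / (n - 1) for v in values]


# Hypothetical prices, not taken from df_basket1.
prices = [10, 20, 20, 40, 50]
print(percent_rank(prices))  # [0.0, 0.25, 0.25, 0.75, 1.0]
```

The smallest value always gets 0.0 and the largest gets 1.0, while the two tied prices share the same percentile rank.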

Percentile Rank of the column by group in pyspark:

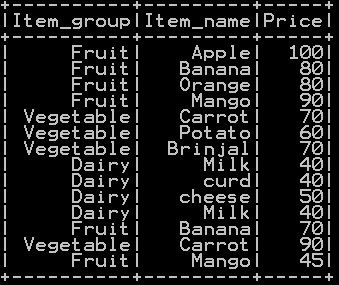

The percentile rank of the column by group is also calculated with the percent_rank() function. We use partitionBy() on the “Item_group” column and orderBy() on the “Price” column, so that the percentile rank is computed separately within each group, in our case each “Item_group”.

### Percentile Rank of the column by group in pyspark

from pyspark.sql.window import Window

import pyspark.sql.functions as F

df_basket1 = df_basket1.select("Item_group","Item_name","Price", F.percent_rank().over(Window.partitionBy(df_basket1['Item_group']).orderBy(df_basket1['Price'])).alias("percent_rank"))

df_basket1.show()

So the resultant dataframe has the percentile rank populated separately within each “Item_group”.
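As a rough illustration of what partitioning changes, the plain-Python sketch below applies the same (rank - 1) / (n - 1) formula inside each group rather than across all rows. The helper `percent_rank_by_group` and the sample rows are hypothetical, chosen only to resemble the column names used above; they are not the actual contents of df_basket1.

```python
from collections import defaultdict


def percent_rank_by_group(rows, group_key, order_key):
    """Add a 'percent_rank' field computed within each group.

    Uses (rank - 1) / (n - 1) per group; a one-row group gets 0.0.
    """
    # Collect the ordering values of every group.
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[order_key])

    result = []
    for row in rows:
        values = groups[row[group_key]]
        n = len(values)
        # Count of strictly smaller values equals (rank - 1).
        smaller = sum(1 for v in values if v < row[order_key])
        pr = smaller / (n - 1) if n > 1 else 0.0
        result.append({**row, "percent_rank": pr})
    return result


# Hypothetical rows, not taken from df_basket1.
basket = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 100},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 20},
    {"Item_group": "Fruit", "Item_name": "Mango", "Price": 60},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 30},
    {"Item_group": "Vegetable", "Item_name": "Onion", "Price": 50},
]

for row in percent_rank_by_group(basket, "Item_group", "Price"):
    print(row["Item_group"], row["Item_name"], row["percent_rank"])
```

Each group gets its own 0.0 to 1.0 scale, so the cheapest item in every group has a percentile rank of 0.0 regardless of how its price compares with items in other groups.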
