Groupby functions in pyspark, also known as aggregate functions (count, sum, mean, min, max), are calculated using groupby(). Grouping by a single column and by multiple columns is shown with an example of each. We will use aggregate functions to get the groupby count, groupby mean, groupby sum, groupby min and groupby max of a dataframe in pyspark. Let’s get clarity with an example.

- Groupby count of dataframe in pyspark – Groupby single and multiple columns

- Groupby sum of dataframe in pyspark – Groupby single and multiple columns

- Groupby mean of dataframe in pyspark – Groupby single and multiple columns

- Groupby min of dataframe in pyspark – Groupby single and multiple columns

- Groupby max of dataframe in pyspark – Groupby single and multiple columns

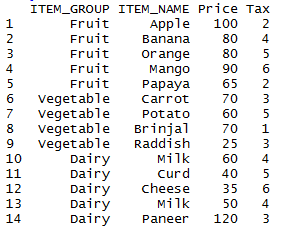

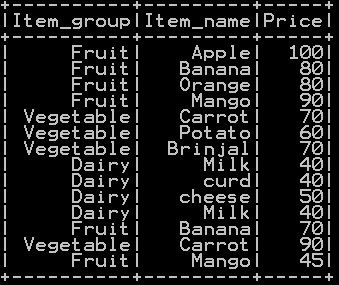

We will use the dataframe named df_basket1:

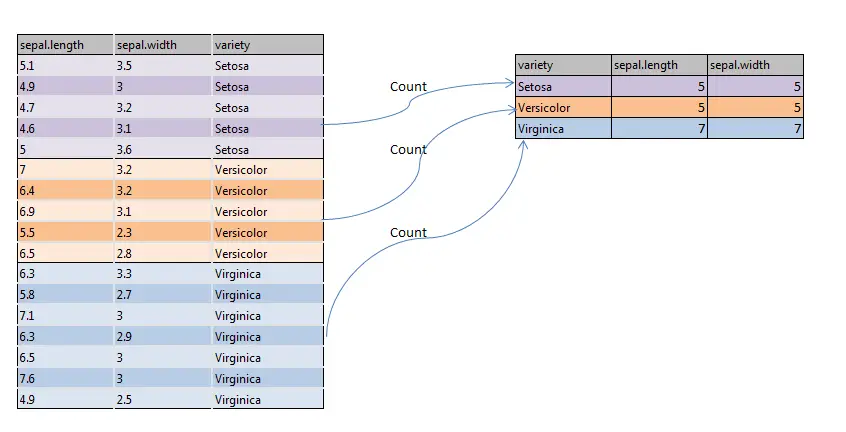

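Groupby count of dataframe in pyspark – Groupby single and multiple columns:

Groupby count of single column in pyspark: Method 1

Groupby count of dataframe in pyspark – this method calls the count() function on the result of the groupBy() function, which returns the number of rows in each group. groupby() is an alias of groupBy(), so either spelling works.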

```python
# Groupby count of single column
df_basket1.groupBy("Item_group").count().show()
```
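To make the grouping behaviour concrete without a Spark session, here is a plain-Python sketch (not PySpark) of what groupBy("Item_group").count() computes. The rows are hypothetical stand-ins for df_basket1, and `groupby_count` is an illustrative helper name, not a Spark API.

```python
from collections import Counter

# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_count(rows, key):
    """Count the rows in each group, mirroring groupBy(key).count()."""
    # Every row is counted, whatever its other column values are.
    return dict(Counter(row[key] for row in rows))


print(groupby_count(rows, "Item_group"))  # {'Fruit': 3, 'Vegetable': 2}
```

The row whose Price is None is still counted. Spark also does not guarantee the row order of a grouped result. Appending .orderBy("Item_group") before .show() is one way to make the output order stable.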

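Groupby count of single column in pyspark: Method 2

Groupby count of dataframe in pyspark – this method uses the groupby() function along with the aggregate function agg(), which takes a dictionary mapping the column name ('Price') to the aggregate function name ('count'). Unlike Method 1, a count on a column skips rows where that column is null, so the two methods agree only when Price has no null values.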

```python
# Groupby count of single column
df_basket1.groupby('Item_group').agg({'Price': 'count'}).show()
```
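The two methods differ only when the aggregated column contains nulls. The plain-Python sketch below mimics agg({'Price': 'count'}), which counts only the non-null Price values. It is not PySpark, and both the rows and the helper `groupby_count_column` are hypothetical.

```python
# Hypothetical sample rows standing in for df_basket1; one Price is missing.
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_count_column(rows, key, column):
    """Count non-null values of `column` per group, like agg({column: 'count'})."""
    counts = {}
    for row in rows:
        # Register the group even if all of its values are null (its count is then 0).
        counts.setdefault(row[key], 0)
        if row[column] is not None:
            counts[row[key]] += 1
    return counts


print(groupby_count_column(rows, "Item_group", "Price"))  # {'Fruit': 3, 'Vegetable': 1}
```

With these rows, Method 1 would report 2 for Vegetable while this count reports 1, because the second Carrot row has no Price.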

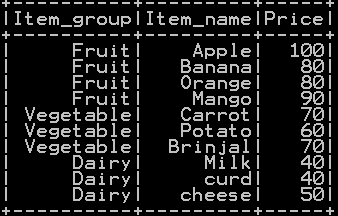

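The groupby count of the “Item_group” column gives one row per distinct Item_group value together with its count.

Groupby count of multiple columns in pyspark

Groupby count of multiple columns of dataframe in pyspark – this method passes several column names to the groupby() function and uses the aggregate function agg() with the {'Price': 'count'} dictionary, so the count is computed for each combination of the grouping columns.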

```python
# Groupby count of multiple columns
df_basket1.groupby('Item_group','Item_name').agg({'Price': 'count'}).show()
```
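When you group by several columns, each group key is a combination of values. This plain-Python sketch (not PySpark, with hypothetical rows and helper) builds the key as a tuple of the Item_group and Item_name values. Like agg({'Price': 'count'}), it skips null Price values.

```python
# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_count_multi(rows, keys, column):
    """Count non-null `column` values per combination of the `keys` columns."""
    counts = {}
    for row in rows:
        group_key = tuple(row[k] for k in keys)  # e.g. ("Fruit", "Apple")
        counts.setdefault(group_key, 0)
        if row[column] is not None:
            counts[group_key] += 1
    return counts


print(groupby_count_multi(rows, ["Item_group", "Item_name"], "Price"))
# {('Fruit', 'Apple'): 2, ('Fruit', 'Banana'): 1, ('Vegetable', 'Carrot'): 1}
```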

This gives one row per distinct combination of “Item_group” and “Item_name” along with its count.

Groupby sum of dataframe in pyspark – Groupby single and multiple columns:

Groupby sum of dataframe in pyspark – Groupby single column

Groupby sum of dataframe in pyspark – this method uses the groupby() function along with the aggregate function agg(), which takes a dictionary mapping the column name ('Price') to the aggregate function name ('sum'). Null values of Price are ignored in the sum.

```python
# Groupby sum of single column
df_basket1.groupby('Item_group').agg({'Price': 'sum'}).show()
```
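Here is a plain-Python sketch (not PySpark, with hypothetical rows and helper) of what agg({'Price': 'sum'}) computes per group. Null prices are skipped. A group whose prices were all null would get None, which matches SQL SUM returning null.

```python
# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_sum(rows, key, column):
    """Sum the non-null `column` values of each group, like agg({column: 'sum'})."""
    sums = {}
    for row in rows:
        sums.setdefault(row[key], None)  # stays None until the group has a non-null value
        value = row[column]
        if value is not None:
            current = sums[row[key]]
            sums[row[key]] = value if current is None else current + value
    return sums


print(groupby_sum(rows, "Item_group", "Price"))  # {'Fruit': 27, 'Vegetable': 3}
```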

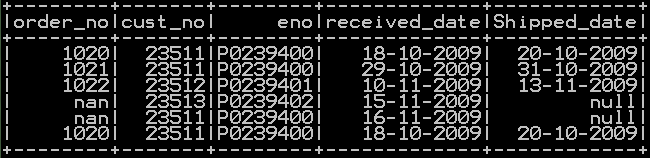

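The groupby sum of the “Item_group” column gives the total Price for each Item_group value, in a column named sum(Price).

Groupby sum of dataframe in pyspark – Groupby multiple columns

Groupby sum of multiple columns of dataframe in pyspark – this method passes several column names to the groupby() function and uses the aggregate function agg() with the {'Price': 'sum'} dictionary, so the sum is computed for each combination of the grouping columns.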

```python
# Groupby sum of multiple columns
df_basket1.groupby('Item_group','Item_name').agg({'Price': 'sum'}).show()
```
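Another way to sketch the multi-column sum in plain Python uses itertools.groupby. The rows and helper are hypothetical, and this is not PySpark. Unlike Spark's groupBy(), itertools.groupby only merges adjacent items, so the rows are sorted by the grouping key first.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_sum_multi(rows, keys, column):
    """Sum non-null `column` values per combination of two or more `keys` columns."""
    key_fn = itemgetter(*keys)  # returns a tuple such as ("Fruit", "Apple")
    result = {}
    # itertools.groupby only groups adjacent rows, so sort by the same key first.
    for group_key, group_rows in groupby(sorted(rows, key=key_fn), key=key_fn):
        values = [r[column] for r in group_rows if r[column] is not None]
        result[group_key] = sum(values) if values else None
    return result


print(groupby_sum_multi(rows, ["Item_group", "Item_name"], "Price"))
# {('Fruit', 'Apple'): 22, ('Fruit', 'Banana'): 5, ('Vegetable', 'Carrot'): 3}
```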

This gives the total Price for each combination of “Item_group” and “Item_name”.

Groupby mean of dataframe in pyspark – Groupby single and multiple columns:

Groupby mean of dataframe in pyspark – Groupby single column

Groupby mean of dataframe in pyspark – this method uses the groupby() function along with the aggregate function agg(), which takes a dictionary mapping the column name ('Price') to the aggregate function name ('mean'). Because mean is an alias of avg in Spark, the result column is named avg(Price).

```python
# Groupby mean of single column
df_basket1.groupby('Item_group').agg({'Price': 'mean'}).show()
```
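The mean per group is the sum of the non-null values divided by their count. A null Price is therefore left out of both the numerator and the denominator. Here is a plain-Python sketch, with hypothetical rows and helper (not PySpark):

```python
# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_mean(rows, key, column):
    """Average of the non-null `column` values per group, like agg({column: 'mean'})."""
    totals = {}  # group -> (running sum, number of non-null values)
    for row in rows:
        total, n = totals.get(row[key], (0, 0))
        if row[column] is not None:
            total, n = total + row[column], n + 1
        totals[row[key]] = (total, n)
    # A group with no non-null values gets None, as Spark's avg returns null.
    return {group: (total / n if n else None) for group, (total, n) in totals.items()}


print(groupby_mean(rows, "Item_group", "Price"))  # {'Fruit': 9.0, 'Vegetable': 3.0}
```

Vegetable averages to 3.0, not 1.5, because the Carrot row without a price is excluded.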

The groupby mean of the “Item_group” column gives the average Price for each Item_group value.

Groupby mean of dataframe in pyspark – Groupby multiple columns

Groupby mean of multiple columns of dataframe in pyspark – this method passes several column names to the groupby() function and uses the aggregate function agg() with the {'Price': 'mean'} dictionary, so the mean is computed for each combination of the grouping columns.

```python
# Groupby mean of multiple columns
df_basket1.groupby('Item_group','Item_name').agg({'Price': 'mean'}).show()
```

This gives the average Price for each combination of “Item_group” and “Item_name”.

Groupby min of dataframe in pyspark – Groupby single and multiple columns:

Groupby min of dataframe in pyspark – Groupby single column

Groupby min of dataframe in pyspark – this method uses the groupby() function along with the aggregate function agg(), which takes a dictionary mapping the column name ('Price') to the aggregate function name ('min').

```python
# Groupby min of single column
df_basket1.groupby('Item_group').agg({'Price': 'min'}).show()
```
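Here is a plain-Python sketch (not PySpark, with hypothetical rows and helper) of agg({'Price': 'min'}) per group. As with the other aggregates, null values are ignored.

```python
# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def groupby_min(rows, key, column):
    """Smallest non-null `column` value per group, like agg({column: 'min'})."""
    result = {}
    for row in rows:
        current = result.setdefault(row[key], None)
        value = row[column]
        if value is not None and (current is None or value < current):
            result[row[key]] = value
    return result


print(groupby_min(rows, "Item_group", "Price"))  # {'Fruit': 5, 'Vegetable': 3}
```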

The groupby min of the “Item_group” column gives the lowest Price for each Item_group value.

Groupby min of dataframe in pyspark – Groupby multiple columns

Groupby min of multiple columns of dataframe in pyspark – this method passes several column names to the groupby() function and uses the aggregate function agg() with the {'Price': 'min'} dictionary, so the minimum is computed for each combination of the grouping columns.

```python
# Groupby min of multiple columns
df_basket1.groupby('Item_group','Item_name').agg({'Price': 'min'}).show()
```

This gives the lowest Price for each combination of “Item_group” and “Item_name”.

Groupby max of dataframe in pyspark – Groupby single and multiple columns:

Groupby max of dataframe in pyspark – Groupby single column

Groupby max of dataframe in pyspark – this method uses the groupby() function along with the aggregate function agg(), which takes a dictionary mapping the column name ('Price') to the aggregate function name ('max').

```python
# Groupby max of single column
df_basket1.groupby('Item_group').agg({'Price': 'max'}).show()
```
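The single-column and multi-column examples differ only in the grouping key, so one helper can cover both. This plain-Python sketch takes the grouping columns as a tuple and any reducing function, such as the built-in max. It is not PySpark, and the rows and the helper `group_agg` are hypothetical.

```python
# Hypothetical sample rows standing in for df_basket1 (not the article's data).
rows = [
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 10},
    {"Item_group": "Fruit", "Item_name": "Apple", "Price": 12},
    {"Item_group": "Fruit", "Item_name": "Banana", "Price": 5},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": 3},
    {"Item_group": "Vegetable", "Item_name": "Carrot", "Price": None},
]


def group_agg(rows, keys, column, fn):
    """Apply `fn` to the non-null `column` values of each group defined by `keys`."""
    groups = {}
    for row in rows:
        group_key = tuple(row[k] for k in keys)
        values = groups.setdefault(group_key, [])
        if row[column] is not None:
            values.append(row[column])
    # Groups with only null values map to None instead of calling fn on an empty list.
    return {k: (fn(v) if v else None) for k, v in groups.items()}


print(group_agg(rows, ("Item_group",), "Price", max))
# {('Fruit',): 12, ('Vegetable',): 3}
print(group_agg(rows, ("Item_group", "Item_name"), "Price", max))
# {('Fruit', 'Apple'): 12, ('Fruit', 'Banana'): 5, ('Vegetable', 'Carrot'): 3}
```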

The groupby max of the “Item_group” column gives the highest Price for each Item_group value.

Groupby max of dataframe in pyspark – Groupby multiple columns

Groupby max of multiple columns of dataframe in pyspark – this method passes several column names to the groupby() function and uses the aggregate function agg() with the {'Price': 'max'} dictionary, so the maximum is computed for each combination of the grouping columns.

```python
# Groupby max of multiple columns
df_basket1.groupby('Item_group','Item_name').agg({'Price': 'max'}).show()
```
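The dictionary form of agg() used throughout this article has one limitation. A Python dict holds at most one entry per key, so it cannot request two aggregates of the same column. The plain-Python snippet below shows the second entry silently replacing the first. To get both the max and the min of Price in one call, the usual alternative is the column-expression form: agg(F.max('Price'), F.min('Price')), after `from pyspark.sql import functions as F`.

```python
# The same column -> function-name mapping style that is passed to agg() above.
spec = {"Price": "max", "Price": "min"}  # duplicate key: the later value wins

print(spec)       # {'Price': 'min'}
print(len(spec))  # 1
```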

This gives the highest Price for each combination of “Item_group” and “Item_name”.
